You don’t have to be paranoid to know people will lie to get what they want. And you don’t have to be snide to know most people will believe what they want to believe. These are old problems. In Genesis, we read that creation was immediately followed by a lie of cosmic proportions: “Eat the fruit and you will become gods.” Today, the same promise is held out with an Apple logo and a digital avatar.

Or maybe you prefer a more naturalist origin story. It doesn’t really change much about the human essentials. Our grip on reality has always been fragile. Perhaps the first lie ever grunted by a caveman was: “Don’t worry, baby! Of course I’ll be back.” Maybe he left a crude picture of himself scratched on her cavern wall, or scribbled an unflattering cartoon of his mangy rival.

Now in the 21st century, we have the deepfake—a virtual replica of a person’s visage and voice. Artificial intelligence systems have gotten so good at mimicry, we’re on the cusp of having widespread cheap decoys that are indistinguishable from the real thing. A deepfake can be made to say or do anything: Political gaffes. Racist rants. Genocidal threats. Sexual assault. Nasty insults to good friends. Picking boogers and wiping them under a restaurant table. Declaration of nuclear war.

Rather than tell lies about someone, with deepfakes you can show lies about them. For the target, it’s like looking into the mirror and seeing your warped reflection take on a life of its own. It’s the ultimate mockery, enacted with scientific precision.

To create a deepfake, an artificial intelligence system is trained on an individual’s facial expressions and quirky vocal inflections. You just feed the AI as many images of the target as you can gather, or as many voice recordings. With enough data, the system can emulate that person on demand. DeepFaceLab is among the best-known tools for creating face-swapped video clones. But there are many others: DeepSwap, Reface AI, Faceswap, on and on.

For an even richer feel, a language model similar to OpenAI’s GPT or Meta’s LLaMA can be trained on the person’s actual words—public statements, private conversations, personal emails, whatever can be collected—so that any fake sentence will capture the target’s psychological profile and personal style.

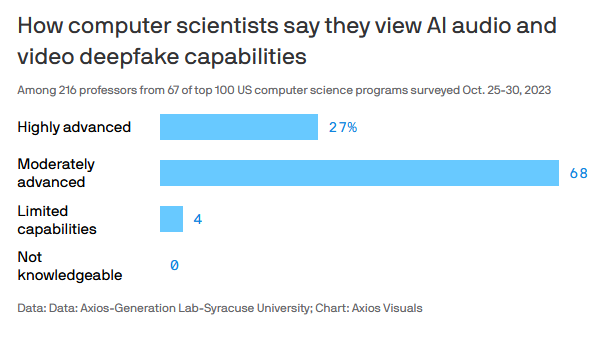

In a recent Axios poll, dozens of professors from top computer science programs said that current AI audio and video deepfake capabilities are “highly advanced,” which isn’t exactly news, but it does convey the ominous mood among some AI experts. “One leading AI architect told us that in private tests, they no longer can distinguish fake from real—which they never thought would be possible so soon.” Axios goes on to warn, “This technology will be available to everyone, including bad international actors, as soon as early 2024.”

Ironically, that will mean the only way to verify any questionable image, video, or audio recording will be to use detection AI. Sentinel software, Intel’s FakeCatcher, Microsoft’s decrepit Video Authenticator, and a ton of other detection apps are already available. The question is whether they can keep up with the increasingly sophisticated deepfake generators—or whether the psychological impact of a well-placed deepfake can be rolled back at all, even after it’s exposed.

Think about the “fine people hoax” used against Donald Trump, or the “surveillance under skin” hoax used against Yuval Noah Harari, both covered and compared in my book, Dark Aeon (ch. 8). Such selectively edited clips are precursors to actual AI-generated deepfakes. It’s easy to think only an idiot believes either of these hoaxes. But these “idiots” include millions of people, many of whom are smarter than I care to admit.

The reality is that an initial false impression carries far more weight than the subsequent correction. This is a primal tendency. Even intelligent people can lose their grip on reality. (See: “War on Terror,” BLM signs, Covid panic, trans flags, etc.)

Of course, a lot of generative AI output is just corporate-approved deepfakery. The latest AI-generated Beatles song is a rubber-stamped deepfake, for instance, or that scene where Egon returned as a nostalgic specter in the newest Ghostbusters movie. But out in the wild, we’re starting to see more and more unapproved deepfakes appearing.

A recent Politico article details two cases where established experts had their personalities scraped from their writing and public statements. Their data was then repackaged as digital replicas. Chinese programmers produced an unauthorized AI bot of the American psychologist Martin Seligman. A tech entrepreneur did the same with the psychotherapist Esther Perel. The purpose is to have a top-notch virtual therapist to talk to.

“Both Seligman and Perel eventually decided to accept the bots rather than challenge their existence,” journalist Mohar Chatterjee writes. “But if they’d wanted to shut down their digital replicas, it’s not clear they would have had a way to do it. Training AI on copyrighted works isn’t actually illegal.”

Set aside the dark implications of mentally unstable people tossing their deepest secrets into the emptiness of a glowing screen, then listening intently as vacuous bots advise them on matters of the soul. It’s worse than that. We’ve reached a point in history where anyone with a sufficient digital footprint can be cloned for any purpose whatsoever.

Naturally, politicians will be primary targets. Last summer, the Ron DeSantis campaign famously used a deepfake of Donald Trump embracing Anthony Fauci. A few months later, the Trump campaign created a goofball ad where digitized GOP candidates introduced themselves as “Ron DeSanctus” from “High Heels University” and Nikki “Bird Brain” Haley from the “Bush War Crime Institute.” The latter are obviously phony, more parody than true deception, but they offer a light foretaste of future fakes.

To be honest, I’m surprised we haven’t seen a flood of sophisticated clones already. Or maybe we have and just don’t realize it. If a deepfake is totally indistinguishable from reality—and can evade all detection software—how would you know it’s fake? For all you know, half of what you see on the internet is fake, or soon will be.

The earliest confirmed clones have been comedic deepfakes and pornographic celebrity face-swaps. With critical elections coming up across the globe, though, I suspect a more destructive wave will come soon enough. Many will be created by foreign powers and deployed to destabilize rivals—bot farms in Russia and China (and elsewhere) slinging defamatory zombies onto social media platforms, as well as clandestine US agencies deploying unnerving deepfakes to disrupt elections, both foreign and domestic.

This leads to two major problems. The first is pervasive digital delusion, what Patrick Wood calls “the collapse of reality,” or what Shane Cashman calls “post-reality.” People will wallow in fake worlds populated with fake people who yammer on and on about things unreal. It’ll be like television or social media, but more vivid.

This phony environment will make room for all manner of phony excuses. From now on, whenever a public figure gets busted on a hot mic or hidden camera, be ready to hear “The DEEPFAKE made me do it!”

The second problem is already timeworn. As the average person loses his or her mind in this increasingly chaotic meme storm, corporations and governments will seize more and more control over public narratives. Much like so-called “disinformation,” rampant deepfakes will justify “official truth” on unprecedented scales. And just as we’ve seen with foreign wars, crime statistics, and medical crises, most “official truth” will be carefully crafted hooey: selectively edited slivers of reality that anchor public consciousness to the Current Thing.

Again, these aren’t new problems so much as old problems intensified. Deepfakes are just a high-tech turd on top of a mountain of stale bullshit.

We already have liars who revel in spinning half-truths with forked tongues. We already have news stories filled with carefully crafted inaccuracies. We already have TVs pumping people’s brains full of false advertising and trite propaganda. We already have an internet brimming with made-up personas who tell you exactly what you want to hear.

Humanity’s grip on reality has always been shaky. A total “collapse of reality,” our precipitous descent into “post-reality,” has always been imminent. As independent thinkers, we’ve always been responsible for sifting out the wheat from the chaff. The difference is that this year’s supply of chaff will be orders of magnitude greater than the last.

So keep your eyes peeled and your pitchfork ready. Because as 2024 gets underway, there’s gonna be a historic crop of hooey to huck into the oven. We are hurtling into a mirage inside a matrix, wrapped up in malicious illusions.

DARK ÆON is now for sale here (BookShop), here (Barnes & Noble), or here (Amazon)

If you are Bitcoin savvy and like a good discount, pick up a copy here at Canonic.xyz
